Matrices: A beginner's guide

Contents

- 1 Matrices as Operations on Quantum States

- 2 Matrices

- 2.1 Basic Definition and Representations

- 2.2 Matrix Addition

- 2.3 Multiplying a Matrix by a Number

- 2.4 Multiplying two Matrices

- 2.5 Notation

- 2.6 The Identity Matrix

- 2.7 Complex Conjugate

- 2.8 Transpose

- 2.9 Hermitian Conjugate

- 2.10 The Inverse of a Matrix

- 2.11 Hermitian Matrices

- 2.12 Unitary Matrices

- 2.13 Inner and Outer Products

- 2.14 Unitary Matrices

Matrices as Operations on Quantum States

The states of a quantum system can be written as

| $\left\vert\psi\right\rangle = \alpha_0\left\vert 0\right\rangle + \alpha_1\left\vert 1\right\rangle,$ | (m.1) |

where $\alpha_0$ and $\alpha_1$ are complex numbers. These states are used to represent quantum systems that can be used to store information. Because $|\alpha_0|^2$ and $|\alpha_1|^2$ are probabilities and must add up to one,

| $\vert\alpha_0\vert^2 + \vert\alpha_1\vert^2 = 1.$ | (m.2) |

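This means that this vector is normalized, i.e. its magnitude (or length) is one. (Appendix B contains a basic introduction to complex numbers.) The basis vectors for such a space are the two vectors $\left\vert 0\right\rangle$ and $\left\vert 1\right\rangle$, which are called computational basis states. These two basis states are represented by

| $\left\vert 0\right\rangle = \left(\begin{array}{c} 1 \\ 0 \end{array}\right), \quad \left\vert 1\right\rangle = \left(\begin{array}{c} 0 \\ 1 \end{array}\right).$ | (m.3) |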

Thus, the qubit state can be rewritten as

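| $\left\vert\psi\right\rangle = \alpha_0\left(\begin{array}{c} 1 \\ 0 \end{array}\right) + \alpha_1\left(\begin{array}{c} 0 \\ 1 \end{array}\right) = \left(\begin{array}{c} \alpha_0 \\ \alpha_1 \end{array}\right).$ | (m.4) |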
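The normalization condition can be checked numerically. The following is a minimal sketch in plain Python; the helper name `normalize` and the sample amplitudes are assumptions chosen only for illustration, not values from the text.

```python
import math

def normalize(alpha0, alpha1):
    """Rescale two complex amplitudes so that |alpha0|^2 + |alpha1|^2 = 1."""
    norm = math.sqrt(abs(alpha0) ** 2 + abs(alpha1) ** 2)
    if norm == 0:
        raise ValueError("the zero vector cannot be normalized")
    return alpha0 / norm, alpha1 / norm

# Hypothetical unnormalized amplitudes, chosen only for illustration.
a0, a1 = normalize(3 + 0j, 4j)

# The two probabilities should add up to one (up to rounding).
total_probability = abs(a0) ** 2 + abs(a1) ** 2
```

Because the amplitudes are complex, the probabilities use the absolute value `abs(...)` rather than a plain square.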

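A very common operation in computing is to change a 0 to a 1 and a 1 to a 0. The operation that does this is denoted $X$. This operator does both: it changes $\left\vert 0\right\rangle$ to $\left\vert 1\right\rangle$ and $\left\vert 1\right\rangle$ to $\left\vert 0\right\rangle$. So we write,

| $X\left\vert 0\right\rangle = \left\vert 1\right\rangle, \quad X\left\vert 1\right\rangle = \left\vert 0\right\rangle.$ | (m.1) |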

Notice that this means that acting with $X$ again returns the original state. Matrices, which are arrays of numbers, are the mathematical incarnation of these operations. It turns out that matrices are the way to represent almost all of the operations in quantum computing, and this will be shown in this section.
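As a small illustration of an operation acting as a matrix, the sketch below applies the bit-flip matrix to the two basis states. It assumes the basis states are the column vectors (1, 0) and (0, 1) and that the flip is the matrix with rows (0, 1) and (1, 0), which is the $X$ used in the multiplication examples of this guide; the helper name `apply` is hypothetical.

```python
# Basis states as column vectors, stored here as plain Python lists.
ket0 = [1, 0]
ket1 = [0, 1]

# The bit-flip matrix X, stored as a list of rows.
X = [[0, 1],
     [1, 0]]

def apply(matrix, vector):
    """Multiply a matrix (list of rows) by a column vector (list)."""
    return [sum(row[k] * vector[k] for k in range(len(vector)))
            for row in matrix]

flipped0 = apply(X, ket0)            # X acting on |0>
flipped1 = apply(X, ket1)            # X acting on |1>
twice = apply(X, apply(X, ket0))     # X applied twice to |0>
```

Applying the flip twice returns the starting vector, which is the statement that acting with the operation again gives back the original state.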

Let us list some important matrices that will be used as examples below:

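| $X = \left(\begin{array}{cc} 0 & 1 \\ 1 & 0 \end{array}\right),$ | (m.1) |

| $Y = \left(\begin{array}{cc} 0 & -i \\ i & 0 \end{array}\right),$ | (m.2) |

| $Z = \left(\begin{array}{cc} 1 & 0 \\ 0 & -1 \end{array}\right),$ | (m.3) |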

| (m.3) |

These all have the general form

| $\left(\begin{array}{cc} a & b \\ c & d \end{array}\right),$ | (m.4) |

where the numbers $a$, $b$, $c$, and $d$ can be complex numbers.

Matrices

Basic Definition and Representations

A matrix is an array of numbers of the following form, with columns col. 1, col. 2, etc., and rows row 1, row 2, etc. The entries of the matrix are labeled by the row and column. So the entry of a matrix $A$ will be $a_{ij}$, where $i$ is the row and $j$ is the column where the number is found. This is how it looks:

$$A = \left(\begin{array}{cccc} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{array}\right).$$

Notice that we represent the whole matrix with a capital letter $A$. The matrix has $m$ rows and $n$ columns, so we say that $A$ is an $m\times n$ matrix. We could also represent it using all of the entries, the array of numbers seen in the equation above. Another way to represent it is to write it as $(a_{ij})$. By this we mean that it is the array of numbers in the parentheses.

Examples

The matrix above,

| (m.1) |

is a matrix.

The matrix

| (m.1) |

is and

| $K = \left(\begin{array}{cc} 2 & 5 \\ 1 & 4 \\ 7 & 0 \\ 8 & 3\end{array}\right),$ | (m.1) |

is a $4\times 2$ matrix.
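The row and column labeling can be tried out directly. This is a minimal sketch that stores the matrix $K$ as a list of rows; the helper names `shape` and `entry` are hypothetical. Python lists count from zero, so the helper shifts the indices to match the convention that the first index is the row and the second is the column, both counted from one.

```python
# The 4 x 2 matrix K, stored as a list of rows.
K = [[2, 5],
     [1, 4],
     [7, 0],
     [8, 3]]

def shape(M):
    """Return (number of rows, number of columns)."""
    return (len(M), len(M[0]))

def entry(M, i, j):
    """Return the entry in row i and column j, both counted from 1."""
    return M[i - 1][j - 1]

k_shape = shape(K)       # rows first, then columns
k_31 = entry(K, 3, 1)    # third row, first column
k_42 = entry(K, 4, 2)    # fourth row, second column
```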

Matrix Addition

Matrix addition is performed by adding each element of one matrix to the corresponding element of another matrix of the same size. Let our two matrices be $A$ as above, and $B$. To represent these in an array,

$$A = \left(\begin{array}{cccc} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1} & a_{m2} & \cdots & a_{mn} \end{array}\right), \quad B = \left(\begin{array}{cccc} b_{11} & b_{12} & \cdots & b_{1n} \\ b_{21} & b_{22} & \cdots & b_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ b_{m1} & b_{m2} & \cdots & b_{mn} \end{array}\right).$$

The sum, which we could call $C = A+B$, is given by

$$C = \left(\begin{array}{cccc} a_{11}+b_{11} & a_{12}+b_{12} & \cdots & a_{1n}+b_{1n} \\ a_{21}+b_{21} & a_{22}+b_{22} & \cdots & a_{2n}+b_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{m1}+b_{m1} & a_{m2}+b_{m2} & \cdots & a_{mn}+b_{mn} \end{array}\right).$$

In other words, the sum gives $c_{11} = a_{11} + b_{11}$, etc. We add them component by component, as we do for vectors.
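Component-by-component addition is short to write out in code. The sketch below is one possible way to do it in plain Python; the function name `mat_add` is hypothetical, and the two sample matrices are borrowed from the multiplication examples in this guide.

```python
def mat_add(A, B):
    """Add two matrices of the same shape, entry by entry."""
    same_shape = len(A) == len(B) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(A, B))
    if not same_shape:
        raise ValueError("matrices must have the same shape")
    return [[a + b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(A, B)]

A = [[2, 3],
     [5, 6]]
B = [[1, 4],
     [7, 2]]

C = mat_add(A, B)   # c_11 = a_11 + b_11, and so on
```

The shape check reflects the fact that addition is only defined when every entry of one matrix has a partner in the other.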


Multiplying a Matrix by a Number

When multiplying a matrix by a number, each element of the matrix gets multiplied by that number. Seem familiar? This is what was done for vectors.

Let $k$ be some number. Then

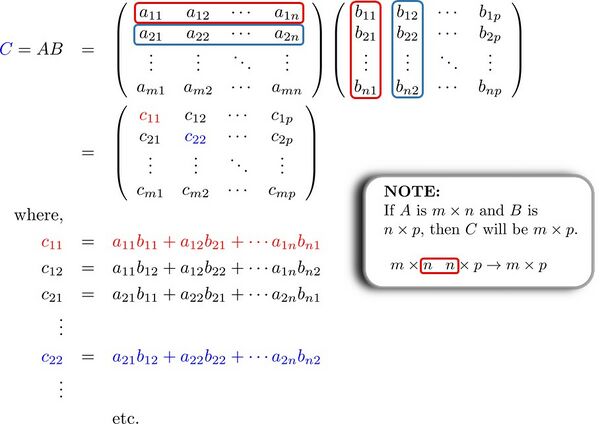
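In code, multiplying by a number means scaling every entry. A minimal sketch, assuming a list-of-rows representation and a hypothetical helper name `scalar_mul`, applied to the matrix $K$ from the examples above:

```python
def scalar_mul(k, A):
    """Multiply every entry of the matrix A by the number k."""
    return [[k * a for a in row] for row in A]

K = [[2, 5],
     [1, 4],
     [7, 0],
     [8, 3]]

three_K = scalar_mul(3, K)      # every entry tripled
i_K = scalar_mul(1j, K)         # k may also be a complex number
```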

Multiplying two Matrices

The product, which we could call $C = AB$, is defined when the number of columns of $A$ equals the number of rows of $B$. Its entries are given by

$$c_{ij} = \sum_{k} a_{ik}b_{kj},$$

that is, the entry in row $i$ and column $j$ of $C$ is obtained by multiplying row $i$ of $A$ into column $j$ of $B$, term by term, and adding the results.

Examples

| $A = \left(\begin{array}{cc} 2 & 3 \\ 5 & 6 \end{array}\right), \mbox{ and } B = \left(\begin{array}{cc} 1 & 4 \\ 7 & 2 \end{array}\right)$ | (m.1) |

Then

| $A B = \left(\begin{array}{cc} 2 & 3 \\ 5 & 6 \end{array}\right)\left(\begin{array}{cc} 1 & 4 \\ 7 & 2 \end{array}\right) = \left(\begin{array}{cc} 2\cdot 1 + 3\cdot 7 & 2\cdot 4 + 3\cdot 2 \\ 5\cdot 1 + 6\cdot 7 & 5\cdot 4 + 6\cdot 2 \end{array}\right) = \left(\begin{array}{cc} 23 & 14 \\ 47 & 32 \end{array}\right),$ | (m.1) |

Let us multiply $X$ and $Z$ from above,

| $X Z = \left(\begin{array}{cc} 0 & 1 \\ 1 & 0 \end{array}\right) \left(\begin{array}{cc} 1 & 0 \\ 0 & -1 \end{array}\right) = \left(\begin{array}{cc} 0\cdot 1 + 1\cdot 0 & 0\cdot 0 + 1\cdot (-1) \\ 1\cdot 1 + 0\cdot 0 & 1\cdot 0 + 0\cdot (-1) \end{array}\right) = \left(\begin{array}{cc} 0 & -1 \\ 1 & 0 \end{array}\right),$ | (m.1) |

It is helpful to notice that this is $-iY$; that is, $XZ = -i Y$.
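The two worked products can be reproduced with a few lines of plain Python. This is a sketch rather than a definitive implementation: the function name `mat_mul` is hypothetical, and the matrix used for $Y$ (rows $(0, -i)$ and $(i, 0)$) is an assumption, chosen because it is the form consistent with $XZ = -iY$.

```python
def mat_mul(A, B):
    """Return the product AB, with c_ij = sum over k of a_ik * b_kj."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must match rows of B")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[2, 3], [5, 6]]
B = [[1, 4], [7, 2]]
AB = mat_mul(A, B)          # the first worked example

X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]     # assumed form of Y, consistent with XZ = -iY
Z = [[1, 0], [0, -1]]

XZ = mat_mul(X, Z)
minus_i_Y = [[-1j * y for y in row] for row in Y]
ZX = mat_mul(Z, X)          # same matrices, opposite order
```

Comparing `XZ` with `ZX` shows that the order of the factors matters: for these two matrices the products differ by a sign.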

Notation

There are many aspects of linear algebra that are quite useful in quantum mechanics. We will briefly discuss several of these aspects here. First, some definitions and properties are provided that will be useful. Some familiarity with matrices will be assumed, although many basic definitions are also included.

Let us denote some $m\times n$ matrix by $A$. The set of all $m\times n$ matrices with real entries is $M(m\times n,\mathbb{R})$. Such matrices are said to be real since they have all real entries. Similarly, the set of $m\times n$ complex matrices is $M(m\times n,\mathbb{C})$. For the set of square $n\times n$ complex matrices, we simply write $M(n,\mathbb{C})$.

We will also refer to the set of matrix elements, $a_{ij}$, where the first index ($i$ in this case) labels the row and the second ($j$) labels the column. Thus the element $a_{23}$ is the element in the second row and third column. A comma is inserted if there is some ambiguity. For example, in a large matrix the element in the 2nd row and 12th column is written as $a_{2,12}$ to distinguish it from $a_{21,2}$, the element in the 21st row and 2nd column.

The Identity Matrix

An identity matrix has the property that when it is multiplied by any matrix, that matrix is unchanged. That is, for any matrix $A$,

$$\mathbb{I}A = A\mathbb{I} = A.$$

Such an identity matrix always has ones along the diagonal and zeroes everywhere else. For example, the $3 \times 3$ identity matrix is

$$\mathbb{I} = \left(\begin{array}{ccc} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{array}\right).$$

It is straightforward to verify that any $3 \times 3$ matrix is not changed when multiplied by the identity matrix.
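The verification for the $3\times 3$ case can be sketched in plain Python. The helper names `identity` and `mat_mul` are hypothetical, and the sample matrix is arbitrary, chosen only for illustration.

```python
def identity(n):
    """Return the n x n identity: ones on the diagonal, zeroes elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def mat_mul(A, B):
    """Return the product AB, with c_ij = sum over k of a_ik * b_kj."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

# An arbitrary 3 x 3 complex matrix, chosen only for illustration.
A = [[1, 2j, 3],
     [4, 5, 6 - 1j],
     [7, 8, 9]]

I3 = identity(3)
left = mat_mul(I3, A)    # identity on the left
right = mat_mul(A, I3)   # identity on the right
```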

Complex Conjugate

The complex conjugate of a matrix is the matrix with each element replaced by its complex conjugate. In other words, to take the complex conjugate of a matrix, one takes the complex conjugate of each entry in the matrix. We denote the complex conjugate with a star, like this: $A^*$. For example,

| $\begin{aligned} A^* &= \left(\begin{array}{ccc} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{array}\right)^* \\ &= \left(\begin{array}{ccc} a_{11}^* & a_{12}^* & a_{13}^* \\ a_{21}^* & a_{22}^* & a_{23}^* \\ a_{31}^* & a_{32}^* & a_{33}^* \end{array}\right). \end{aligned}$ | (C.2) |

(Notice that the notation for a matrix is a capital letter, whereas the entries are represented by lower case letters.)

Transpose

The transpose of a matrix is the same set of elements, but now the first row becomes the first column, the second row becomes the second column, and so on. Thus the rows and columns are interchanged. For example, for a square $3\times 3$ matrix, the transpose is given by

| $\begin{aligned} A^T &= \left(\begin{array}{ccc} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{array}\right)^T \\ &= \left(\begin{array}{ccc} a_{11} & a_{21} & a_{31} \\ a_{12} & a_{22} & a_{32} \\ a_{13} & a_{23} & a_{33} \end{array}\right). \end{aligned}$ | (C.3) |

Hermitian Conjugate

The complex conjugate of the transpose of a matrix is called the Hermitian conjugate, or simply the dagger, of the matrix. It is called the dagger because of the symbol used to denote it, $\dagger$:

| $(A^T)^* = (A^*)^T \equiv A^\dagger.$ | (C.4) |

For our $3\times 3$ example,

$$A^\dagger = \left(\begin{array}{ccc} a_{11}^* & a_{21}^* & a_{31}^* \\ a_{12}^* & a_{22}^* & a_{32}^* \\ a_{13}^* & a_{23}^* & a_{33}^* \end{array}\right).$$

If a matrix is its own Hermitian conjugate, i.e. $A^\dagger = A$, then we call it a Hermitian matrix. (Clearly this is only possible for square matrices.) Hermitian matrices are very important in quantum mechanics since their eigenvalues are real. (See Sec.(Eigenvalues and Eigenvectors).)
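The complex conjugate, transpose, and dagger fit together as sketched below in plain Python. The function names are hypothetical. The matrix `Y` (rows $(0, -i)$ and $(i, 0)$) is assumed to be the $Y$ of this guide, and `M` is an arbitrary matrix chosen only for illustration.

```python
def conjugate(A):
    """Complex-conjugate every entry of A."""
    return [[complex(a).conjugate() for a in row] for row in A]

def transpose(A):
    """Interchange the rows and columns of A."""
    return [list(column) for column in zip(*A)]

def dagger(A):
    """Hermitian conjugate: the complex conjugate of the transpose."""
    return conjugate(transpose(A))

def is_hermitian(A):
    """True when A equals its own Hermitian conjugate."""
    return dagger(A) == A

Y = [[0, -1j], [1j, 0]]           # assumed form of Y
M = [[1 + 2j, 3], [4j, 5 - 1j]]   # arbitrary, for illustration only

order_is_irrelevant = conjugate(transpose(M)) == transpose(conjugate(M))
```

Here `is_hermitian(Y)` holds while `is_hermitian(M)` does not, and `order_is_irrelevant` checks on one example that conjugating and transposing can be done in either order.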

The trace of a matrix is the sum of the diagonal elements and is denoted $\mbox{Tr}$. So for example, the trace of an $n\times n$ matrix $A$ is

$$\mbox{Tr}(A) = \sum_{i=1}^{n} a_{ii}.$$

Some useful properties of the trace are the following:

- $\mbox{Tr}(AB) = \mbox{Tr}(BA)$

- $\mbox{Tr}(A + B) = \mbox{Tr}(A) + \mbox{Tr}(B)$.

Using the first of these results, for any invertible matrix $U$,

$$\mbox{Tr}(UAU^{-1}) = \mbox{Tr}(U^{-1}UA) = \mbox{Tr}(A).$$

This relation is used so often that we state it here explicitly.
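The two trace properties listed above can be checked on a small example. This is a minimal sketch in plain Python with hypothetical helper names; the sample matrices are the $A$ and $B$ from the multiplication example.

```python
def trace(A):
    """Sum of the diagonal entries of a square matrix."""
    if any(len(row) != len(A) for row in A):
        raise ValueError("the trace needs a square matrix")
    return sum(A[i][i] for i in range(len(A)))

def mat_mul(A, B):
    """Return the product AB, with c_ij = sum over k of a_ik * b_kj."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_add(A, B):
    """Add two matrices of the same shape, entry by entry."""
    return [[a + b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(A, B)]

A = [[2, 3], [5, 6]]
B = [[1, 4], [7, 2]]

tr_AB = trace(mat_mul(A, B))
tr_BA = trace(mat_mul(B, A))
tr_sum = trace(mat_add(A, B))
```

The products `AB` and `BA` are different matrices here, yet their traces agree.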

The determinant of a $2\times 2$ matrix,

| $N = \left(\begin{array}{cc} a & b \\ c & d \end{array}\right),$ | (C.5) |

is given by

| $\det(N) = ad-bc.$ | (C.6) |

Higher-order determinants can be written in terms of smaller ones in a recursive way. For example, let

$$M = \left(\begin{array}{ccc} m_{11} & m_{12} & m_{13} \\ m_{21} & m_{22} & m_{23} \\ m_{31} & m_{32} & m_{33}\end{array}\right).$$

Then

$$\det(M) = m_{11}\det\left(\begin{array}{cc} m_{22} & m_{23} \\ m_{32} & m_{33} \end{array}\right) - m_{12}\det\left(\begin{array}{cc} m_{21} & m_{23} \\ m_{31} & m_{33} \end{array}\right) + m_{13}\det\left(\begin{array}{cc} m_{21} & m_{22} \\ m_{31} & m_{32} \end{array}\right).$$

The determinant of a matrix $A$ can also be written in terms of its components as

| $\det(A) = \sum_{i,j,k,l,\ldots} \epsilon_{ijkl\ldots}\, a_{1i}a_{2j}a_{3k}a_{4l} \cdots,$ | (C.7) |

where the symbol

| $\epsilon_{ijkl\ldots} = \begin{cases} +1, & \mbox{if } ijkl\ldots = 1234\ldots \mbox{ (in order, or any even number of permutations)},\\ -1, & \mbox{if } ijkl\ldots = 2134\ldots \mbox{ (or any odd number of permutations)},\\ \;\;\; 0, & \mbox{otherwise (meaning any index is repeated)}. \end{cases}$ | (C.8) |

Let us consider the example of the $3\times 3$ matrix $A$ given above. The determinant can be calculated by

$$\det(A) = \sum_{i,j,k} \epsilon_{ijk}\, a_{1i}a_{2j}a_{3k},$$

where, explicitly,

| $\epsilon_{ijk} = \begin{cases} +1, & \mbox{if } ijk = 123, 231, \mbox{ or } 312 \mbox{ (these are even permutations of } 123),\\ -1, & \mbox{if } ijk = 213, 132, \mbox{ or } 321 \mbox{ (these are odd permutations of } 123),\\ \;\;\; 0, & \mbox{otherwise (meaning any index is repeated)}, \end{cases}$ | (C.9) |

so that

| $\begin{aligned} \det(A) &= \epsilon_{123}a_{11}a_{22}a_{33} +\epsilon_{132}a_{11}a_{23}a_{32} +\epsilon_{231}a_{12}a_{23}a_{31} \\ &\quad +\epsilon_{213}a_{12}a_{21}a_{33} +\epsilon_{312}a_{13}a_{21}a_{32} +\epsilon_{321}a_{13}a_{22}a_{31}. \end{aligned}$ | (C.10) |

Now given the values of $\epsilon_{ijk}$ in Eq. C.9, this is

$$\det(A) = a_{11}a_{22}a_{33} - a_{11}a_{23}a_{32} + a_{12}a_{23}a_{31} - a_{12}a_{21}a_{33} + a_{13}a_{21}a_{32} - a_{13}a_{22}a_{31}.$$

The determinant has several properties that are useful to know. A few are listed here:

- The determinant of the transpose of a matrix is the same as the determinant of the matrix itself: $\det(A) = \det(A^T).$

- The determinant of a product is the product of determinants: $\det(AB) = \det(A)\det(B).$

From this last property, another specific property can be derived. If we take the determinant of the product of a matrix and its inverse, we find

$$\det(AA^{-1}) = \det(A)\det(A^{-1}) = \det(\mathbb{I}) = 1,$$

since the determinant of the identity is one. This implies that

$$\det(A^{-1}) = \frac{1}{\det(A)}.$$
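Both ways of computing a determinant described above, the recursive expansion along the first row and the sum over permutations weighted by the $\epsilon$ symbol, can be sketched in plain Python and compared. All function names are hypothetical, and the $3\times 3$ sample matrix is arbitrary. The sign of a permutation is found here by counting inversions, which is one standard way to decide whether it is even or odd.

```python
from itertools import permutations

def perm_sign(p):
    """+1 for an even permutation, -1 for an odd one (inversion count)."""
    inversions = sum(1 for i in range(len(p))
                     for j in range(i + 1, len(p)) if p[i] > p[j])
    return -1 if inversions % 2 else 1

def det_epsilon(A):
    """Sum over permutations of sign * a_{1i} a_{2j} a_{3k} ..."""
    n = len(A)
    total = 0
    for p in permutations(range(n)):   # repeated indices contribute zero
        term = perm_sign(p)
        for row in range(n):
            term *= A[row][p[row]]
        total += term
    return total

def det_recursive(A):
    """Expand along the first row in terms of smaller determinants."""
    if len(A) == 1:
        return A[0][0]
    total = 0
    for j in range(len(A)):
        minor = [row[:j] + row[j + 1:] for row in A[1:]]
        total += (-1) ** j * A[0][j] * det_recursive(minor)
    return total

N = [[2, 3], [5, 6]]                      # ad - bc = 12 - 15
M = [[2, 0, 1], [1, 3, 2], [1, 1, 4]]     # arbitrary illustration
M_transposed = [list(col) for col in zip(*M)]
```

The permutation sum has $n!$ terms, so it is useful for small matrices and for derivations rather than for large numerical work.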

The Inverse of a Matrix

The inverse of an $n\times n$ square matrix $A$ is another $n\times n$ square matrix, denoted $A^{-1}$, such that

$$AA^{-1} = A^{-1}A = \mathbb{I},$$

where $\mathbb{I}$ is the identity matrix consisting of zeroes everywhere except the diagonal, which has ones. For example, the $3\times 3$ identity matrix is

$$\mathbb{I} = \left(\begin{array}{ccc} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{array}\right).$$

It is important to note that a matrix is invertible if and only if its determinant is nonzero. Thus one only needs to calculate the determinant to see if a matrix has an inverse or not.
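For a $2\times 2$ matrix, the determinant test and the inverse can be written out explicitly. The sketch below uses the standard closed-form inverse of a $2\times 2$ matrix, which is not stated in the text above and is brought in here as an assumption for illustration. It is restricted to integer entries so that `fractions.Fraction` keeps the arithmetic exact, and the function names are hypothetical.

```python
from fractions import Fraction

def inverse_2x2(N):
    """Inverse of [[a, b], [c, d]] with integer entries, if it exists."""
    (a, b), (c, d) = N
    det = a * d - b * c
    if det == 0:
        raise ValueError("determinant is zero, so there is no inverse")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]

def mat_mul(A, B):
    """Return the product AB, with c_ij = sum over k of a_ik * b_kj."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

A = [[2, 3], [5, 6]]             # determinant -3, so invertible
A_inv = inverse_2x2(A)
check_right = mat_mul(A, A_inv)  # should be the identity
check_left = mat_mul(A_inv, A)   # should also be the identity
```

A matrix such as `[[1, 2], [2, 4]]` has determinant zero, and the function reports that no inverse exists.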

Hermitian Matrices

Hermitian matrices are important for a variety of reasons; primarily, it is because their eigenvalues are real. Thus Hermitian matrices are used to represent density operators and density matrices, as well as Hamiltonians. The density operator is a positive semi-definite Hermitian matrix (it has no negative eigenvalues) that has its trace equal to one. In any case, it is often desirable to represent $N\times N$ Hermitian matrices using a real linear combination of a complete set of $N\times N$ Hermitian matrices. A set of $N\times N$ Hermitian matrices is complete if any Hermitian matrix can be represented in terms of the set. Let $\{\lambda_i\}$ be a complete set. Then any Hermitian matrix can be represented by $\sum_i a_i \lambda_i$.
The set can always be taken to be a set of traceless Hermitian matrices and the identity matrix. This is convenient for the density matrix (its trace is one) because the identity part of an $N\times N$ Hermitian matrix is $(1/N)\mathbb{I}$ if we take all others in the set to be traceless. For the Hamiltonian, the set consists of a traceless part and an identity part, where the identity part just gives an overall phase which can often be neglected.

One example of such a set which is extremely useful is the set of Pauli matrices. These are discussed in detail in Chapter 2 and in particular in Section 2.4.
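For the $2\times 2$ case, the expansion $\sum_i a_i\lambda_i$ can be tried out with the identity and the three Pauli matrices as the set. The sketch below relies on a standard fact that is not stated in the text above: these four matrices satisfy $\mbox{Tr}(\lambda_i\lambda_j) = 2\delta_{ij}$, so each coefficient can be read off as $a_i = \mbox{Tr}(H\lambda_i)/2$. The function names and the sample matrix `H` are hypothetical.

```python
def mat_mul(A, B):
    """Return the product AB, with c_ij = sum over k of a_ik * b_kj."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def trace(A):
    """Sum of the diagonal entries."""
    return sum(A[i][i] for i in range(len(A)))

I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]
Z = [[1, 0], [0, -1]]
BASIS = [I2, X, Y, Z]

def coefficients(H):
    """Real coefficients a_i = Tr(H lambda_i) / 2 for Hermitian H."""
    return [complex(trace(mat_mul(H, P))).real / 2 for P in BASIS]

def rebuild(coeffs):
    """Form the sum of a_i * lambda_i."""
    return [[sum(a * P[r][c] for a, P in zip(coeffs, BASIS))
             for c in range(2)] for r in range(2)]

H = [[2, 1 - 1j], [1 + 1j, 0]]   # a sample Hermitian matrix
a = coefficients(H)
H_rebuilt = rebuild(a)
```

The coefficient of the identity is half the trace of `H`, which matches the statement that the identity part carries the trace when all other members of the set are traceless.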

Unitary Matrices

A unitary matrix $U$ is one whose inverse is also its Hermitian conjugate, $U^\dagger = U^{-1}$, so that

$$U^\dagger U = UU^\dagger = \mathbb{I}.$$

If the unitary matrix also has determinant one, it is said to be a special unitary matrix. The set of $n\times n$ unitary matrices is denoted $U(n)$ and the set of special unitary matrices is denoted $SU(n)$.

Unitary matrices are particularly important in quantum mechanics because they describe the evolution of quantum states. They have this ability due to the fact that the rows and columns of unitary matrices (viewed as vectors) are orthonormal. (This is made clear in an example below.) This means that when they act on a basis vector of the form

| $\left\vert j\right\rangle = \left(\begin{array}{c} 0 \\ 0 \\ \vdots \\ 1 \\ \vdots \\ 0 \end{array}\right),$ | (C.11) |

with a single 1, in say the $j$th spot, and zeroes everywhere else, the result is a normalized complex vector. Acting on a set of orthonormal vectors of the form given in Eq.(C.11) will produce another orthonormal set.
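
A small numerical sketch may make this concrete. The particular matrix `U` below (a Hadamard-type matrix multiplied by an arbitrary phase) is only an illustrative choice, and the variable names are not taken from the text. Acting on the basis vectors picks out the columns of `U`, which are normalized and mutually orthogonal.

```python
import numpy as np

# Illustrative 2x2 unitary: Hadamard-type matrix times an arbitrary phase.
U = np.exp(1j * 0.3) / np.sqrt(2) * np.array([[1, 1], [1, -1]], dtype=complex)

# Basis vectors of the form in Eq.(C.11), for n = 2.
e0 = np.array([1, 0], dtype=complex)
e1 = np.array([0, 1], dtype=complex)

v0 = U @ e0  # equals the first column of U
v1 = U @ e1  # equals the second column of U

norm0 = np.linalg.norm(v0)  # magnitude of the first image vector
norm1 = np.linalg.norm(v1)  # magnitude of the second image vector
overlap = np.vdot(v0, v1)   # np.vdot conjugates its first argument

print(np.isclose(norm0, 1), np.isclose(norm1, 1), np.isclose(overlap, 0))
```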

Let us consider the example of a $2\times 2$ unitary matrix,

| $U = \left(\begin{array}{cc} a & b \\ c & d \end{array}\right).$ | (C.12) |

The inverse of this matrix is the Hermitian conjugate,

| $U^{-1} = U^\dagger = \left(\begin{array}{cc} a^* & c^* \\ b^* & d^* \end{array}\right),$ | (C.13) |

provided that the matrix $U$ satisfies the constraints

| $\begin{aligned} \lvert a\rvert^2 + \lvert b\rvert^2 = 1, \; & \; ac^*+bd^* =0 \\ ca^*+db^*=0, \; & \; \lvert c\rvert^2 + \lvert d\rvert^2 =1, \end{aligned}$ | (C.14) |

and

| $\begin{aligned} \lvert a\rvert^2 + \lvert c\rvert^2 = 1, \; & \; ba^*+dc^* =0 \\ b^*a+d^*c=0, \; & \; \lvert b\rvert^2 + \lvert d\rvert^2 =1. \end{aligned}$ | (C.15) |

Looking at each row as a vector, the constraints in Eq.(C.14) are the orthonormality conditions for the vectors forming the rows. Similarly, the constraints in Eq.(C.15) are the orthonormality conditions for the vectors forming the columns.
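
The constraints in Eqs.(C.14) and (C.15) can be checked numerically. The sketch below uses one possible parametrization of a $2\times 2$ unitary matrix in terms of three angles; the parametrization, the angle values, and the variable names are assumptions made for this example only.

```python
import numpy as np

# Arbitrary illustrative angles (hypothetical values).
theta, phi, lam = 0.7, 0.4, 1.1

# One possible parametrization of the entries of a 2x2 unitary matrix.
a = np.cos(theta)
b = -np.exp(1j * lam) * np.sin(theta)
c = np.exp(1j * phi) * np.sin(theta)
d = np.exp(1j * (phi + lam)) * np.cos(theta)

U = np.array([[a, b], [c, d]])
U_dagger = U.conj().T  # Hermitian conjugate, as in Eq.(C.13)

# Row conditions of Eq.(C.14): these are the entries of U @ U_dagger.
row_constraints = [abs(a)**2 + abs(b)**2,
                   a * np.conj(c) + b * np.conj(d),
                   abs(c)**2 + abs(d)**2]

# Column conditions of Eq.(C.15): these are the entries of U_dagger @ U.
col_constraints = [abs(a)**2 + abs(c)**2,
                   b * np.conj(a) + d * np.conj(c),
                   abs(b)**2 + abs(d)**2]

print(np.allclose(row_constraints, [1, 0, 1]))
print(np.allclose(col_constraints, [1, 0, 1]))
print(np.allclose(np.linalg.inv(U), U_dagger))
```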

Inner and Outer Products

It is very helpful to note that a column vector with $n$ entries is an $n\times 1$ matrix. A row vector is a $1\times n$ matrix.

Now that we have a definition for the Hermitian conjugate, consider the case of an $n\times 1$ matrix, i.e. a vector. In Dirac notation, for $n = 2$, this is

$\left\vert\psi\right\rangle = \left(\begin{array}{c} \alpha \\ \beta \end{array}\right).$

The Hermitian conjugate comes up so often that we use the following notation for vectors:

$\left\langle\psi\right\vert \equiv (\left\vert\psi\right\rangle)^\dagger = (\alpha^*, \beta^*).$

This is a row vector and in Dirac notation is denoted by the symbol $\left\langle\cdot\right\vert$, which is called a bra. Let us consider a second complex vector,

$\left\vert\phi\right\rangle = \left(\begin{array}{c} \gamma \\ \delta \end{array}\right).$

The inner product between $\left\vert\psi\right\rangle$ and $\left\vert\phi\right\rangle$ is computed as follows:

| $\begin{aligned} \left\langle\phi\mid\psi\right\rangle & \equiv (\left\vert\phi\right\rangle)^\dagger\left\vert\psi \right\rangle \\ &= (\gamma^*,\delta^*) \left(\begin{array}{c} \alpha \\ \beta \end{array}\right) \\ &= \gamma^*\alpha + \delta^*\beta. \end{aligned}$ | (C.16) |

The vector $\left\vert\cdot\right\rangle$ is called a ket. When you put a bra together with a ket, you get a bracket. This is the origin of the terms.
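
As a quick numerical check of Eq.(C.16), the following sketch computes the inner product in two ways. The component values are arbitrary and chosen only for illustration; `np.vdot` is used because it conjugates its first argument, which matches the role of the bra.

```python
import numpy as np

# Illustrative components (hypothetical values): psi = (alpha, beta), phi = (gamma, delta).
psi = np.array([1 + 1j, 2], dtype=complex)
phi = np.array([3, 1j], dtype=complex)

# Components of the bra <phi|: the complex conjugates of the ket components.
bra_phi = phi.conj()

# <phi|psi> = gamma^* alpha + delta^* beta, as in Eq.(C.16).
inner = bra_phi @ psi

# np.vdot conjugates its first argument, so it gives the same number.
inner_vdot = np.vdot(phi, psi)

print(inner)  # (3+1j)
```

Swapping the two vectors gives the complex conjugate, $\left\langle\psi\mid\phi\right\rangle = \left\langle\phi\mid\psi\right\rangle^*$.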

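The outer product between these same two vectors, taking $\left\vert\psi\right\rangle$ as the ket and $\left\langle\phi\right\vert$ as the bra, is

$\left\vert\psi\right\rangle\left\langle\phi\right\vert = \left(\begin{array}{c} \alpha \\ \beta \end{array}\right)(\gamma^*,\delta^*) = \left(\begin{array}{cc} \alpha\gamma^* & \alpha\delta^* \\ \beta\gamma^* & \beta\delta^* \end{array}\right).$

This type of product is also called a Kronecker product or a tensor product. Vectors and matrices can be considered special cases of the more general class of tensors. A tensor can have any number of indices; for a three-index tensor, for example, the indices label rows, columns, and depth.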
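
The relation between the outer product and the Kronecker product can be seen in a short sketch. The ordering used here (the first vector as the ket, the second as the bra) and the component values are assumptions made for the example.

```python
import numpy as np

# Illustrative components (hypothetical values).
psi = np.array([1 + 1j, 2], dtype=complex)
phi = np.array([3, 1j], dtype=complex)

# Outer product |psi><phi|: a column vector times a conjugated row vector.
outer = np.outer(psi, phi.conj())

# The same matrix as a Kronecker product of a 2x1 matrix and a 1x2 matrix.
ket = psi.reshape(2, 1)
bra = phi.conj().reshape(1, 2)
outer_kron = np.kron(ket, bra)

print(outer.shape)                     # (2, 2)
print(np.allclose(outer, outer_kron))  # True
```

The trace of this outer product equals the inner product of the same two vectors, `np.vdot(phi, psi)`, which is a convenient consistency check.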

Unitary Matrices

Unitary matrices are very important because they preserve the magnitude of a complex vector. In other words, if the magnitude of a vector is one, for example $\big\Vert \left\vert\psi\right\rangle \big\Vert = 1$, then $\big\Vert U\left\vert\psi\right\rangle \big\Vert = 1$. This follows from $U^\dagger U = \mathbb{I}$: the squared magnitude of $U\left\vert\psi\right\rangle$ is $\left\langle\psi\right\vert U^\dagger U\left\vert\psi\right\rangle = \left\langle\psi\mid\psi\right\rangle$.
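
A minimal numerical sketch of this property, with a unitary matrix and a state chosen purely for illustration:

```python
import numpy as np

# Illustrative unitary (Hadamard-type matrix) and normalized state (hypothetical values).
U = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
psi = np.array([0.6, 0.8j], dtype=complex)

norm_before = np.linalg.norm(psi)     # magnitude of |psi>
norm_after = np.linalg.norm(U @ psi)  # magnitude of U|psi>

print(np.isclose(norm_before, 1.0), np.isclose(norm_after, 1.0))
```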

Copyright

© Copyright 2022 BKR Collaboration