Appendix A - Basic Probability Concepts


Introduction

In this appendix, we present definitions and some example calculations that will aid our discussions of quantum mechanics. This is not meant to be a comprehensive introduction to the topic; it is primarily meant to introduce notation and terminology.

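Roughly, what we mean by probability is the chance that a certain event occurs, out of the set of events that could possibly occur. When all possible outcomes are equally likely, it can be defined as

$$P(E) = \frac{\text{number of outcomes in which } E \text{ occurs}}{\text{total number of possible outcomes}} \qquad \text{(A.1)}$$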

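There is also an experimental definition:

$$P(E) \approx \frac{\text{number of trials in which } E \text{ occurs}}{\text{total number of trials}} \qquad \text{(A.2)}$$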

In the limit of an infinite number of trials, these two definitions agree. In practice, one can use a very large number of trials to make the two very close to one another.
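A short simulation makes the relationship between the two definitions concrete. This is a sketch under stated assumptions: the coin is assumed fair and is modeled with Python's `random` module, and the helper name `relative_frequency` and the fixed seed are our own choices for illustration.

```python
import random

def relative_frequency(num_trials: int, seed: int = 0) -> float:
    """Estimate P(heads) for a fair coin as (number of heads) / (number of trials)."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    heads = sum(1 for _ in range(num_trials) if rng.random() < 0.5)
    return heads / num_trials

theoretical = 1 / 2  # one favorable outcome out of two equally likely outcomes
for n in (10, 1_000, 100_000):
    estimate = relative_frequency(n)
    print(f"{n:>7} trials: estimate = {estimate:.4f}, "
          f"difference = {abs(estimate - theoretical):.4f}")
```

With a fixed seed the output is reproducible; the estimate typically moves closer to 1/2 as the number of trials grows, though for any finite run it need not equal 1/2 exactly.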

There are some properties of probability distributions that are sometimes called axioms:

- The sum of all probabilities is 1.

- Probabilities for mutually exclusive events add.

The first of these should be clear. Probabilities can be neither larger than one nor negative. If every possibility is taken into account, then the sum of all of their probabilities must be 1. The last property will be discussed further below. It says that the probabilities for mutually exclusive events, that is, events that cannot both occur, add. So if events $A$ and $B$ cannot both happen at the same time, then the probability that one or the other occurs, denoted $P(A \text{ or } B)$, is equal to $P(A) + P(B)$.

Let us consider a very simple example of probability: flipping a coin. The set of possible outcomes is $\{H, T\}$ (heads or tails). Since only these two outcomes can occur and each is equally likely, the probability of each is $\frac{1}{2}$, i.e., $P(H) = P(T) = \frac{1}{2}$, because the probabilities for all possible outcomes must sum to 1, i.e., $P(H) + P(T) = 1$.

In probability, the word "and" can be somewhat counterintuitive at first. For instance, if someone told you that they have 5 apples and just received 3 more, the operation that takes place in your head is $5 + 3 = 8$ apples. When working with probabilities, however, "and" corresponds to multiplication. For example, say the probability that Bob stays and works through his lunch hour is $P(B)$ and the probability that Kathy stays and works through lunch is $P(K)$. If I were to ask, "What is the probability that Bob and Kathy both stay and work through lunch?", you would not want to add the probabilities: their sum can reach 1, which would imply that both will certainly work through lunch, or even exceed 1, which no probability can do. That doesn't make sense, because nothing we know guarantees that both will work through lunch. Instead, if their decisions are independent of one another, we multiply their respective probabilities: $P(B \text{ and } K) = P(B)\,P(K)$. This answer is lower than the probability for either individual, which matches intuition: both probabilities are less than one, so the probability of both events happening should be lower still.
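The Bob-and-Kathy argument can be checked by simulating many lunch hours. The probabilities 0.6 and 0.5 below are hypothetical values chosen only for illustration (their sum, 1.1, could not be a probability), and the sketch assumes the two decisions are independent; for dependent events the simple product rule does not hold.

```python
import random

P_BOB = 0.6    # hypothetical probability that Bob works through lunch
P_KATHY = 0.5  # hypothetical probability that Kathy works through lunch

def simulate_both(num_days: int, seed: int = 1) -> float:
    """Fraction of simulated days on which both Bob and Kathy work through lunch.

    Each person's decision is drawn independently (an assumption of this sketch).
    """
    rng = random.Random(seed)
    both = 0
    for _ in range(num_days):
        bob_stays = rng.random() < P_BOB
        kathy_stays = rng.random() < P_KATHY
        if bob_stays and kathy_stays:
            both += 1
    return both / num_days

print("sum of probabilities:", P_BOB + P_KATHY)      # greater than 1, so not a probability
print("product of probabilities:", P_BOB * P_KATHY)  # the predicted P(both)
print("simulated frequency:", simulate_both(200_000))
```

The simulated frequency should land close to the product, not the sum.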

Now that we have examined "and," let's take a look at "or." For mutually exclusive events, "or" corresponds to addition, which is consistent with the requirement that the probabilities for all possible outcomes add up to 1. Revisiting the coin-flip example, the two possible outcomes are that you obtain heads or you obtain tails. This is the last property listed above: you cannot obtain both heads and tails on the same flip, so the two are mutually exclusive events. If one happens, the other cannot, and their probabilities add: $P(H \text{ or } T) = P(H) + P(T) = 1$.
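The addition rule for mutually exclusive outcomes can be verified by enumerating a small sample space with exact fractions. The fair six-sided die here is our own example, not one from the text, and every face is assumed equally likely.

```python
from fractions import Fraction

faces = range(1, 7)                           # outcomes of one roll of a fair die
p = {face: Fraction(1, 6) for face in faces}  # each face is equally likely

def prob(event) -> Fraction:
    """Probability of an event, given as a set of faces."""
    return sum((p[face] for face in event), Fraction(0))

one, two = {1}, {2}
assert one.isdisjoint(two)    # rolling a 1 and rolling a 2 are mutually exclusive
print(prob(one | two))        # P(1 or 2) = 1/3
print(prob(one) + prob(two))  # P(1) + P(2) = 1/3
print(prob(set(faces)))       # all outcomes together: 1
```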

Sets of Events

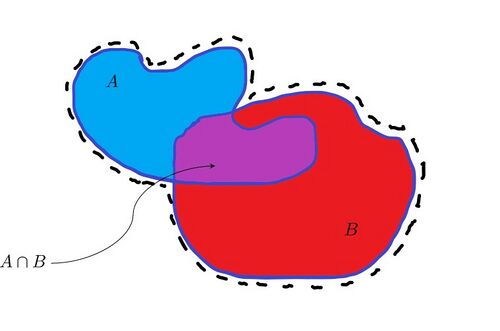

Figure A.1: Sets of events. The set $A$ includes the blue part and the purple part. The set $B$ includes the red part and the purple part. The set $A \cap B$ is only the purple part. The set $A \cup B$ includes the blue, the red, and the purple parts (everything enclosed by the dotted line).

If $A$ and $B$ are mutually exclusive sets of events,

$$P(A \cup B) = P(A) + P(B). \qquad \text{(A.3)}$$

If the sets are mutually exclusive, they have no elements in common, so $A \cap B = \emptyset$ and $P(A \cap B) = 0$.

In general,

$$P(A \cup B) = P(A) + P(B) - P(A \cap B). \qquad \text{(A.4)}$$

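The last term prevents overcounting: outcomes in $A \cap B$ are included in both $P(A)$ and $P(B)$, so their probability must be subtracted once.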
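The same enumeration approach shows why the correction term is needed when events overlap. In this hypothetical die example, $A$ is "the roll is even" and $B$ is "the roll is greater than 3"; they share the outcomes 4 and 6. Python's set operators `|` and `&` stand in for $\cup$ and $\cap$.

```python
from fractions import Fraction

sample_space = set(range(1, 7))  # a fair six-sided die, all faces equally likely

def prob(event: set) -> Fraction:
    """P(event) = (number of outcomes in the event) / (total number of outcomes)."""
    return Fraction(len(event), len(sample_space))

A = {x for x in sample_space if x % 2 == 0}  # even: {2, 4, 6}
B = {x for x in sample_space if x > 3}       # greater than 3: {4, 5, 6}

lhs = prob(A | B)                 # P(A or B), counted directly from {2, 4, 5, 6}
naive = prob(A) + prob(B)         # double counts the outcomes 4 and 6
rhs = naive - prob(A & B)         # subtract the overlap once
print(lhs, naive, rhs)            # 2/3 1 2/3
```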

Example 1

In several of the examples, cards will be used. An ordinary deck has 52 cards in 4 suits: clubs, spades, hearts, and diamonds. The clubs and spades are black; the hearts and diamonds are red. Each suit has 13 cards: ace, the numbers 2-10, jack, queen, and king.

Question: What is the probability of drawing a club or a jack from the deck?

Answer: In this case there are 52 cards, each equally likely to be drawn from the deck. 13 cards are clubs, and 4 cards are jacks (one from each suit). However, one jack is also a club. So there are 13 clubs and 3 other jacks, for a total of 16 different cards that are either a club or a jack, and the probability is $16/52 = 4/13$. Note that if $A$ is the set of clubs and $B$ is the set of jacks, then $P(A \cup B) = P(A) + P(B) - P(A \cap B) = \frac{13}{52} + \frac{4}{52} - \frac{1}{52} = \frac{16}{52}$, in agreement with the general rule above.
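Example 1 can be checked by building the deck explicitly and counting. This is a minimal sketch: representing a card as a `(rank, suit)` tuple and the helper names `prob`, `is_club`, and `is_jack` are illustrative choices, and every card is assumed equally likely to be drawn.

```python
from fractions import Fraction
from itertools import product

SUITS = ["clubs", "spades", "hearts", "diamonds"]
RANKS = ["ace"] + [str(n) for n in range(2, 11)] + ["jack", "queen", "king"]
deck = list(product(RANKS, SUITS))  # 13 ranks x 4 suits = 52 (rank, suit) cards

def prob(event) -> Fraction:
    """Fraction of the deck for which event(card) is true; all cards equally likely."""
    return Fraction(sum(1 for card in deck if event(card)), len(deck))

def is_club(card) -> bool:
    return card[1] == "clubs"

def is_jack(card) -> bool:
    return card[0] == "jack"

def is_club_or_jack(card) -> bool:
    return is_club(card) or is_jack(card)

def is_club_and_jack(card) -> bool:
    return is_club(card) and is_jack(card)

direct = prob(is_club_or_jack)                                    # count the 16 cards directly
by_rule = prob(is_club) + prob(is_jack) - prob(is_club_and_jack)  # general addition rule
print(len(deck), direct, by_rule)  # 52 4/13 4/13
```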

Conditional Probability

We will use the notation $P(A \cap B)$ for the probability that both $A$ and $B$ occur, and $P(B|A)$ for the probability that $B$ occurs given that $A$ has already occurred. Then

$$P(A \cap B) = P(B|A)\,P(A). \qquad \text{(A.4)}$$

So the conditional probability is

$$P(B|A) = \frac{P(A \cap B)}{P(A)}, \qquad P(A) \neq 0. \qquad \text{(A.5)}$$

If $P(B|A) = P(B)$, then the probability of $B$ does not depend on $A$. Such events are called independent, and

$$P(A \cap B) = P(A)\,P(B). \qquad \text{(A.6)}$$
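The conditional-probability and independence formulas can be tested on a card deck like the one in Example 1. In this sketch the helper names are hypothetical; it shows that rank and suit are independent, while suit and color are not.

```python
from fractions import Fraction
from itertools import product

SUITS = ["clubs", "spades", "hearts", "diamonds"]
RANKS = ["ace"] + [str(n) for n in range(2, 11)] + ["jack", "queen", "king"]
DECK = list(product(RANKS, SUITS))  # 52 equally likely (rank, suit) cards

def prob(event) -> Fraction:
    return Fraction(sum(1 for card in DECK if event(card)), len(DECK))

def conditional(b, a) -> Fraction:
    """P(B|A) = P(A and B) / P(A); requires P(A) > 0."""
    return prob(lambda c: a(c) and b(c)) / prob(a)

def is_club(card) -> bool:
    return card[1] == "clubs"

def is_jack(card) -> bool:
    return card[0] == "jack"

def is_black(card) -> bool:
    return card[1] in ("clubs", "spades")

# Independent: knowing the card is a club does not change P(jack).
print(conditional(is_jack, is_club), prob(is_jack))   # 1/13 1/13
# Not independent: knowing the card is black doubles P(club).
print(conditional(is_club, is_black), prob(is_club))  # 1/2 1/4
```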

Example 2

Example 3

Example 4

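Let 20 blue pens and 10 red pens be in a box, all mixed up. Suppose we pull out a pen and it is red. This happens with probability $P(R_1) = 10/30 = 1/3$. Now suppose we draw a second pen without putting the first one back. Given that the first pen was red, 9 of the remaining 29 pens are red, so $P(R_2|R_1) = 9/29$. So

$$P(R_1 \cap R_2) = P(R_2|R_1)\,P(R_1) = \frac{9}{29}\cdot\frac{1}{3} = \frac{3}{29},$$

or approximately $0.103$.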
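The pen calculation can be cross-checked with a Monte Carlo simulation. This sketch assumes that a second pen is drawn without replacing the first; `draw_two`, the seed, and the number of trials are illustrative choices.

```python
import random
from fractions import Fraction

BOX = ["blue"] * 20 + ["red"] * 10  # 20 blue pens and 10 red pens

def draw_two(rng: random.Random) -> tuple[str, str]:
    """Draw two pens without replacement (an assumption of this sketch)."""
    first, second = rng.sample(BOX, 2)
    return first, second

# Exact values from counting
p_first_red = Fraction(10, 30)              # 1/3
p_second_red_given_first = Fraction(9, 29)  # 9 red pens left among 29
p_both_red = p_first_red * p_second_red_given_first
print(p_both_red, float(p_both_red))        # 3/29, about 0.103

# Monte Carlo estimate of the same quantities
rng = random.Random(2)
trials = 200_000
draws = [draw_two(rng) for _ in range(trials)]
first_red = [d for d in draws if d[0] == "red"]
both_red = [d for d in first_red if d[1] == "red"]
print(len(first_red) / trials)         # close to 1/3
print(len(both_red) / len(first_red))  # close to 9/29, i.e. P(second red | first red)
print(len(both_red) / trials)          # close to 3/29
```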

Mean, Median, and Variance

Example: (This example is a variation of one given by David J. Griffiths in Introduction to Quantum Mechanics [4].)

Suppose that in some room, there are four people with the following heights:

- 1 person is 1.5 meters tall

- 1 person is 1.6 meters tall

- 2 people are 1.8 meters tall

Let $N$ stand for the total number of people (here $N = 4$). We might write the number of people with certain heights as $N(1.5) = 1$, $N(1.6) = 1$, and $N(1.8) = 2$.

Now if I draw a name out of a hat that contains each person's name once, I will get the name of a person who is 1.6 meters tall with probability $1/4$. (We assume that each person has a unique name and that it appears once and only once in the hat.) We write this as

$$P(1.6) = \frac{N(1.6)}{N} = \frac{1}{4},$$

and we would generally write, for any value $j$,

$$P(j) = \frac{N(j)}{N}.$$

Now, since we are certain to get someone's name when we draw, we must have

$$\sum_j P(j) = 1,$$

which is easy enough to check: $\frac{1}{4} + \frac{1}{4} + \frac{2}{4} = 1$.
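The probability distribution for the heights can be written down directly from the counts. In this sketch the dictionary names `counts` and `P` are our own; exact fractions make the normalization check exact.

```python
from fractions import Fraction

# Number of people with each height (in meters), from the example above
counts = {1.5: 1, 1.6: 1, 1.8: 2}
N = sum(counts.values())  # total number of people, 4

# P(j) = N(j) / N for each height j
P = {j: Fraction(n_j, N) for j, n_j in counts.items()}
print(P[1.6])           # 1/4
print(sum(P.values()))  # 1, the normalization condition
```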

There are several aspects of this probability distribution that we might like to know. Here are some that are particularly useful:

- The most probable value (or mode) for the height is 1.8 meters.

- The median is 1.7 meters (two people above and two below).

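- The average (or mean) is given by

$$\langle j \rangle = \frac{\sum_j j\,N(j)}{N} = \sum_j j\,P(j) = \frac{1.5 + 1.6 + 2(1.8)}{4} = 1.675 \text{ meters}. \qquad \text{(A.1)}$$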

Note that the mean and the median do not have to be the same. If there is an odd number of values, the median is the middle number in the sorted list; if there is an even number, it is the mean of the two middle values. Here the median is $(1.6 + 1.8)/2 = 1.7$ meters, while the mean is 1.675 meters, so the two differ. The bracket, $\langle \cdot \rangle$, is the standard notation for the average value of a function. For a function $f(j)$, it is calculated as

$$\langle f(j) \rangle = \sum_j f(j)\,P(j).$$

For the average of $j$ itself, this is just

$$\langle j \rangle = \sum_j j\,P(j).$$

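Note: The average value is called the expectation value in quantum mechanics. This name can be misleading, because the expectation value is not necessarily the most probable value, nor is it necessarily "what to expect" in a single measurement. In this example, no one is 1.675 meters tall.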
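The mode, median, and mean for the height example can be computed with Python's standard `statistics` module, alongside a direct implementation of $\langle f(j) \rangle = \sum_j f(j) P(j)$. Heights are stored as exact `Fraction`s to avoid floating-point round-off; the helper `expectation` is a hypothetical name introduced for illustration.

```python
import statistics
from fractions import Fraction

# Heights in meters, one entry per person; Fractions keep the arithmetic exact
heights = [Fraction("1.5"), Fraction("1.6"), Fraction("1.8"), Fraction("1.8")]
N = len(heights)
P = {j: Fraction(heights.count(j), N) for j in set(heights)}  # P(j) = N(j)/N

def expectation(f, dist):
    """Average value <f(j)> = sum over j of f(j) * P(j)."""
    return sum(f(j) * p for j, p in dist.items())

mean = expectation(lambda j: j, P)
print(float(mean))                        # 1.675
print(float(statistics.mean(heights)))    # 1.675, averaging the list directly
print(float(statistics.median(heights)))  # 1.7, mean of the two middle values
print(float(statistics.mode(heights)))    # 1.8, the most probable value
```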

When one would like to discuss the properties of a particular probability distribution, describing it takes some effort. It is not enough to know the average, median, and most probable values; a lot of details of the probability distribution remain unknown to us if these are all we are given. What else would one like to know? Without describing it entirely, one may like to know more about the "shape" of the distribution. For example, how spread out is it?

The most important measure of this is the variance, which is the square of the standard deviation $\sigma$, i.e., $\sigma^2$. The variance is defined (in terms of our variable $j$) as

$$\sigma^2 = \langle (\Delta j)^2 \rangle, \qquad \text{(A.2)}$$

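where $\Delta j = j - \langle j \rangle$. This can also be written as

$$\sigma^2 = \langle j^2 \rangle - \langle j \rangle^2. \qquad \text{(A.3)}$$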
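Both expressions for the variance give the same result for the height example, which a short exact computation confirms. Here `expectation` is an illustrative helper, not part of any library.

```python
import math
from fractions import Fraction

heights = [Fraction("1.5"), Fraction("1.6"), Fraction("1.8"), Fraction("1.8")]
N = len(heights)
P = {j: Fraction(heights.count(j), N) for j in set(heights)}  # P(j) = N(j)/N

def expectation(f):
    """<f(j)> = sum over j of f(j) * P(j)."""
    return sum(f(j) * p for j, p in P.items())

mean = expectation(lambda j: j)
var_deviation = expectation(lambda j: (j - mean) ** 2)   # <(Δj)^2>
var_moments = expectation(lambda j: j ** 2) - mean ** 2  # <j^2> - <j>^2
sigma = math.sqrt(var_deviation)                         # standard deviation, in meters

print(var_deviation, var_moments)  # 27/1600 27/1600
print(round(sigma, 4))             # 0.1299
```

The second form is often more convenient by hand, since it needs only $\langle j \rangle$ and $\langle j^2 \rangle$ rather than a deviation for every value.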

Notation: It is also very common to write the index as a subscript. In this case, the average value of a function $f$ can be written as

$$\langle f \rangle = \sum_j f_j\,P_j.$$

Stirling's Formula

For large , the following approximation is quite useful: